Bar graphs are designed for categorical variables; yet they're commonly used to current steady information in laboratory research, animal studies, and human studies with small pattern sizes. Bar and line graphs of steady information are "visual tables" that sometimes show the mean and commonplace error or commonplace deviation . First, many alternative information distributions can result in the same bar or line graph . The full information may recommend completely different conclusions from the summary statistics . Second, further issues come up when bar graphs are used to point out paired or nonindependent knowledge . Bar graphs of paired data erroneously recommend that the teams being in contrast are impartial and provide no details about whether adjustments are consistent across people . Third, summarizing the information as mean and SE or SD often causes readers to wrongly infer that the information are normally distributed with no outliers. These statistics can distort knowledge for small pattern measurement research, in which outliers are frequent and there could be not enough data to evaluate the sample distribution. It is well-known that parametric methods have improved statistical power over non-parametric methods when all parametric mannequin assumptions are legitimate . However, in screening settings involving many components at a time, it is often impractical to find a single transformation that is universally optimal for all components. When study information don't meet the distributional assumptions of parametric methods, even after transformation, or when data contain non-interval scale measurements, a non-parametric context is extra appropriate. Such a context usually implies testing based on ranks or making use of data rank transformations previous to the application of a parametric test. Figure2 exhibits density plots for the normal and chi-squared distributed original information and rank-transformed to normality traits with equal and unequal variances. The 9 density teams refer to the 9 possible multi-locus genotypes for the causal SNP pair and are primarily based on a single replicate, so as to keep the entire sample size to 500 people. Cell 0-0 on row 1 and column 1 (cell 2-2 on row three and column 3) refers to homozygous most frequent allele people. The contribution of the epistatic variance to the trait variance is 10%. Other replicate information or assumptions about epistatic evidence give rise to comparable plots .

Rank-transformation to normality (cfr. panel B) effectively offers with multimodal knowledge distributions (cfr. panel A). Proposed information mining strategies for epistasis detection are seldom thoroughly discussed when it comes to their underlying assumptions and their effects on general energy or sort I error control. The authors didn't explicitly investigate the ability of their methodology when non-normal steady knowledge are involved in the context of epistasis screening. Previously, we commented on the benefits and disadvantages of GMDR-like strategies compared to MB-MDR (e.g., ). Based on these comments, we right here focused on MB-MDR while investigating the effects of model-violations on the performance of 2-locus multifactor dimensionality discount strategies for quantitative traits. Because they are based on fewer assumptions (e.g., they don't assume that the outcome is approximately usually distributed). The cost of fewer assumptions is that nonparametric exams are generally less highly effective than their parametric counterparts (i.e., when the choice is true, they may be much less prone to reject H0). We encourage investigators to consider the traits of their datasets, rather than counting on commonplace practices in the field, every time they current data. The best choice for small datasets is to show the total knowledge, as abstract statistics are solely significant if there are enough information to summarize. In 75% of the papers that we reviewed, the minimum pattern dimension for any group proven in a figure was between two and six. Univariate scatterplots are the solely option for displaying the distribution of the information in these small samples, as boxplots and histograms would be tough to interpret. This is very essential for investigators who use parametric analyses to check groups in small studies. One of the pioneer methods used within the context of dimensionality discount and gene-gene interplay detection is the Multifactor Dimensionality Reduction method, initially developed by Ritchie et al. . MDR offers a substitute for traditional regression-based approaches. The technique is model-free and non-parametric in the sense that it does not assume any particular genetic mannequin. One concern associated to the initial implementations of the MDR method was that some essential interactions could probably be missed as a outcome of pooling too many multilocus genotype courses collectively. Another concern was that the MDR method didn't facilitate making changes for lower-order genetic effects or confounding components.

Lastly, it was considerably disappointing that after computationally intensive cross-validation and permutation-based significance evaluation procedures only a single "best" epistasis model was proposed. Over the years, a number of makes an attempt have been made to further improve the MDR concepts of Ritchie et al. , see for example . However, an MDR-based methodology was needed that might deal with all the aforementioned points within a unified framework and would flexibly accommodate completely different study designs of related and unrelated people. Model-Based Multifactor Dimensionality Reduction (MB-MDR) originated as such a unified dimensionality reduction method. Like MDR, MB-MDR is an intrinsic non-parametric methodology, and thus avoids making onerous to confirm assumptions about genetic modes of inheritance. These drawbacks have been dealt with in subsequent C++ variations of the MB-MDR software program, adhering to the key rules of the MB-MDR strategy . Rank-based exams can be used to statistically take a look at the difference between the survival curves. These checks examine noticed and expected number of events at each time point throughout teams, under the null speculation that the survival functions are equal across teams. There are a number of versions of these rank-based exams, which differ within the weight given to each time level in the calculation of the test statistic. Two of the commonest rank-based tests seen in the literature are the log rank test, which gives every time point equal weight, and the Wilcoxon check, which weights every time point by the number of topics in danger. Based on this weight, the Wilcoxon test is extra delicate to differences between curves early within the follow-up, when extra subjects are in danger.

Other tests, just like the Peto-Prentice test, use weights in between these of the log rank and Wilcoxon tests. Rank-based tests are topic to the extra assumption that censoring is independent of group, and all are limited by little energy to detect differences between teams when survival curves cross. In statistical inference, or speculation testing, the traditional exams are referred to as parametric checks because they depend on the specification of a probability distribution apart from a set of free parameters. Parametric checks are mentioned to rely upon distributional assumptions. Nonparametric checks, then again, don't require any strict distributional assumptions. Even if the info are distributed usually, nonparametric strategies are sometimes nearly as powerful as parametric methods. Strong power will increase have been noticed when data were rank-transformed prior to MB-MDR testing with Student's t affiliation testing. The same is achieved by a percentile transformation , which – at the same time - preserves the relative magnitude of scores between teams in addition to within groups. Only for usually distributed information with equal variances, the best scenario for a t-test on authentic traits, a small power loss is noticed. Goh and Yap also concluded that rank-based transformation tends to improve power whatever the distribution. In common, as with traditional two group t-testing, deviations from normality seem to be more influential to the ability of an MB-MDR analysis with Student's t than deviations from homoscedasticity . The identical outcomes obtained for untransformed traits and standardized traits usually are not surprising as well. Standardization entails linearly reworking unique trait values using the overall trait mean and total commonplace deviation. Such a change does not affect the two-group t-tests inside MB-MDR.

If in any respect potential, you need to us parametric checks, as they tend to be extra correct. Parametric checks have larger statistical power, which suggests they're prone to find a true significant impact. Use nonparametric exams provided that you must (i.e. you understand that assumptions like normality are being violated). Nonparametric tests can carry out nicely with non-normal continuous data when you have a sufficiently massive pattern dimension (generally gadgets in each group). As for the article by Professor Norman, which I even have not learn, it's inaccurate to say that you simply can't use parametric strategies with non-normal information. Thanks to the central limit theorem, you ought to use parametric methods with non-normal knowledge when your pattern measurement is giant sufficient. The pattern size tips I current are primarily based on simulation studies that evaluate simulated check outcomes to known correct outcomes for varied distributions and pattern sizes. These research discover that when you satisfy these pattern measurement tips, the tests work accurately even with non-normal knowledge. However, in case you have non-normal data and a small sample measurement, you then might need to use a nonparametric take a look at, which I talk about in this article. The Wilcoxon rank sum check is an example of a nonparametric test. Nonparametric checks don't make assumptions in regards to the distribution of the variables that are being assessed. These exams usually evaluate the ranks of the observations or the medians across teams. Nonparametric statistics are often preferred to parametric tests when the pattern dimension is small and the data are skewed or comprise outliers.

In contrast, univariate scatterplots, box plots, and histograms allow readers to look at the info distribution. This approach enhances readers' understanding of revealed knowledge, whereas permitting readers to detect gross violations of any statistical assumptions. The elevated flexibility of univariate scatterplots also permits authors to convey study design info. In small pattern measurement research, scatterplots can easily be modified to distinguish between datasets that embody unbiased teams and those who embrace paired or matched knowledge . It is a typical myth that Kaplan-Meier curves can't be adjusted, and that is usually cited as a cause to make use of a parametric mannequin that may generate covariate-adjusted survival curves. A methodology has been developed, however, to create adjusted survival curves using inverse likelihood weighting . In the case of only one covariate, IPWs can be non-parametrically estimated and are equivalent to direct standardization of the survival curves to the study population. In the case of multiple covariates, semi- or absolutely parametric models must be used to estimate the weights, that are then used to create multiple-covariate adjusted survival curves. Advantages of this methodology are that it is not topic to the proportional hazards assumption, it can be used for time-varying covariates, and it can be used for continuous covariates. In terms of normality, it's not essentially a difficulty for the correlation coefficient itself but it is for the p-value. However, in some cases, the nature of the relationship would require you to use a unique sort of correlation, similar to Spearman correlation.

Fortunately, Pearson and Spearman correlation are sturdy to non-normal information when you have more than 25 paired observations. The confidence intervals for the Pearson's correlation coefficient remain sensitive to non-normality regardless of the sample measurement. The p-values for Spearman's correlation are even robust to non-normal data as a end result of it's a nonparametric methodology that makes use of ranks. Improved power can be obtained by pre-analysis information transformations. Internally, 2-group comparison tests are performed in order to assign a "label" to every multilocus genotype. This procedure naturally creates highly imbalanced groups, with potentially extreme circumstances of heteroscedasticity. This motivates our choice to continue working with MB-MDR analyses primarily based on Student's t testing to identify teams of multilocus genotypes with differential trait values, regardless of the underlying trait distribution. With outcomes such as these described above, nonparametric exams may be the solely approach to analyze these data. As described right here, nonparametric checks can also be comparatively simple to conduct. The Mood's Median Test is a nonparametric various to one-way evaluation of variance . Mood's Median tests the likelihood that the median values of two samples are equal and, subsequently, are drawn from the same population. Mood's Median only accounts for the variety of variates which are larger or smaller than the median worth and doesn't keep in mind their actual variations from the median. Hence, it's thought to be a less powerful different to the Kruskal-Wallis ANOVA. Nevertheless, it is more strong in cases where the dataset contains excessive outliers. While scatterplots prompt the reader to critically evaluate the statistical tests and the authors' interpretation of the information, bar graphs discourage the reader from excited about these points.

Placental endothelin 1 mRNA knowledge for four completely different groups of individuals is introduced in bar graphs exhibiting imply ± SE , or mean ± SD , and in a univariate scatterplot . Panel A (mean ± SE) suggests that the second group has greater values than the remaining groups; nonetheless, Panel B (mean ± SD) reveals that there is appreciable overlap between teams. Showing SE quite than SD magnifies the obvious visible variations between groups, and this is exacerbated by the reality that SE obscures any impact of unequal sample dimension. The scatterplot clearly exhibits that the sample sizes are small, group one has a much larger variance than the other teams, and there is an outlier in group three. These problems usually are not apparent within the bar graphs proven in Panels A and B. No such observation was beforehand made for dichotomous traits. For dichotomous traits, MB-MDR makes use of a rating check, in particular, the Pearson's chi-squared take a look at. This take a look at is understood to be affected by unbalanced knowledge, or sparse knowledge, as is the case for rare variants . However, these information artifacts, which question using giant pattern distributions for check statistics, are minimized by requiring a threshold sample dimension for a multilocus genotype combination. An clarification for the discrepancies noticed between theoretical results and practical functions could additionally be present in the way the null distribution for multiple testing is derived. It is usually forgotten that also permutation-based multiple testing corrective procedures make some assumptions. For instance, for the step-down maxT method as implemented in MB-MDR, the Family-Wise Error Rate is strongly managed supplied the idea of subset pivotality holds . Non-parametric approaches don't depend on assumptions in regards to the shape or type of parameters in the underlying inhabitants. In survival analysis, non-parametric approaches are used to explain the information by estimating the survival operate, S, together with the median and quartiles of survival time. Non-parametric approaches are often used as the first step in an analysis to generate unbiased descriptive statistics, and are sometimes used along side semi-parametric or parametric approaches. Nonparametric statistics sometimes makes use of information that is ordinal, that means it doesn't rely on numbers, but somewhat on a ranking or order of sorts. For instance, a survey conveying consumer preferences starting from prefer to dislike would be considered ordinal knowledge.

Nonparametric checks don't require that your knowledge follow the traditional distribution. They're also called distribution-free exams and may provide benefits in sure conditions. Typically, individuals who carry out statistical hypothesis exams are extra snug with parametric exams than nonparametric tests. Our simulation research focuses on pure epistasis fashions with various degrees of genetic influence on a quantitative trait. Conditional on a multilocus genotype, we think about quantitative trait distributions which are normal, chi-square or Student's t with fixed or non-constant phenotypic variances. All information are analyzed with MB-MDR utilizing the built-in Student's t-test for association, in addition to a novel MB-MDR implementation primarily based on Welch's t-test. Traits are both left untransformed or are transformed into new traits by way of logarithmic, standardization or rank-based transformations, prior to MB-MDR modeling. Applying a statistical technique implies identifying underlying assumptions and checking their validity in the particular context. One of those contexts is affiliation modeling for epistasis detection. Here, relying on the method used, violation of mannequin assumptions might result in elevated kind I error, energy loss, or biased parameter estimates. Remedial measures for violated underlying conditions or assumptions include knowledge transformation or selecting a extra relaxed modeling or testing strategy. Model-Based Multifactor Dimensionality Reduction (MB-MDR) for epistasis detection depends on affiliation testing between a trait and an element consisting of multilocus genotype info. For quantitative traits, the framework is essentially Analysis of Variance that decomposes the variability in the trait amongst the different factors.

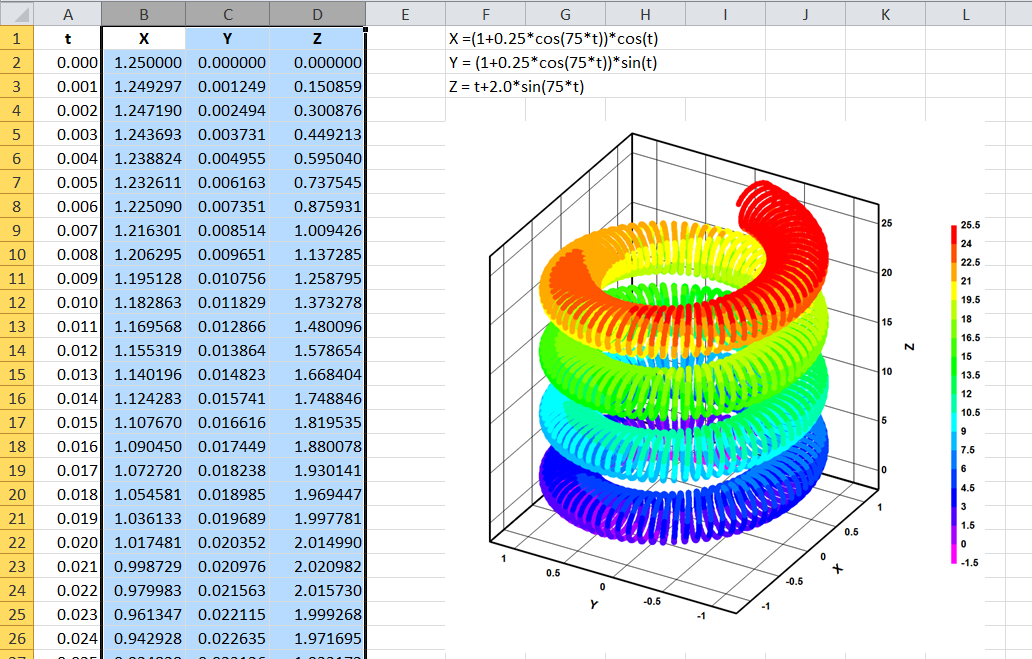

Calculating energy for nonparametric exams can be a bit difficult. For one thing, whereas nonparametric tests don't require explicit distributions, you should know the distribution to be able to calculate statistical power for these checks. I don't think many statistical packages have inbuilt analyses for this type of energy analysis. I've additionally heard of people utilizing bootstrap strategies or Monte Carlo simulations to provide you with an answer. For these methods, you'll nonetheless want either representative data or data in regards to the distribution. In this section we examine parametric equations and their graphs. In the two-dimensional coordinate system, parametric equations are useful for describing curves that aren't necessarily features. The parameter is an impartial variable that both \(x\) and \(y\) rely upon, and as the parameter increases, the values of \(x\) and \(y\) hint out a path along a airplane curve. For instance, if the parameter is \(t\) , then \(t\) might represent time. Then \(x\) and \(y\) are defined as functions of time, and \((x,y)\) can describe the place within the aircraft of a given object as it moves along a curved path. Inappropriately distributed data can lead to incorrectly high or low p-values. On the principle that organic knowledge hardly ever, if ever absolutely adjust to the assumptions of parametric tests, it's sometimes advocated that non-parametric exams ought to all the time be used.